For decades, the accepted measure of success for traditional design validation testing has been “parity or better.” Many in the consumer packaged goods (CPG) industry assume this means a given design is “equal to” or “as good as” its predecessor or competitor—which is understandable, since that is the dictionary definition of the word.

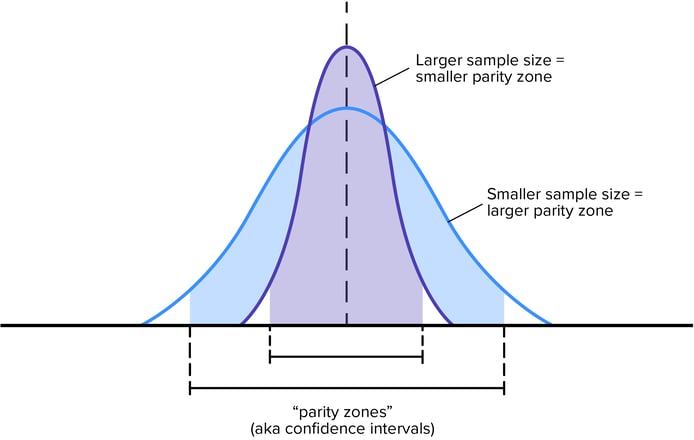

The problem (or at least one of the problems) is that this is not what parity means in the context of design validation. Parity simply means that the sample size is too small to say with confidence whether the new design’s performance is better, worse, or exactly the same.

In other words, not only does it not mean the design is “as good as”—it means the research firm doesn’t know which design is actually better. After months of work and considerable expense, parity means the result of your design testing is conclusively… inconclusive.

How did what amounts to a very expensive shrug become the measure of package design success? Essentially, misconceptions and misgivings have become entrenched and are perpetually reinforced, in part due to a lack of a robust alternative (until now—but we’ll get to that). It starts with:

An underappreciation of design’s importance. Roughly half of redesign initiatives are followed by business losses, which over time has convinced many in the industry that design lacks commercial impact. Traditional validation testing—which is considered de rigueur for discerning CPG brands—has done little to change the failure rate, which has merely solidified the misperception that design plays a minor role in outcomes relative to the rest of the marketing mix. This factor is certainly not helped by the…

High frequency of parity results in design testing. Traditional validation testing arrives at a parity result the vast majority of the time. The reasons are both mathematical and methodological. From a statistical point of view, studies with small sample sizes (100 or fewer) must measure a large difference before anyone can say with confidence that the difference is real.
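To make the sample-size point concrete, here is a rough sketch of how large an observed gap between two designs has to be before a standard two-sided z-test for two proportions would call it significant. Everything in it is an illustrative assumption rather than a description of any particular research firm's method: the function name `min_significant_difference`, the 50% baseline preference rate, the 95% confidence level, and equal, independent samples for each design.

```python
from math import sqrt
from statistics import NormalDist


def min_significant_difference(n_per_design: int,
                               baseline: float = 0.5,
                               confidence: float = 0.95) -> float:
    """Approximate smallest gap between two proportions that a two-sided
    z-test would flag as significant.

    Assumptions (illustrative only): both designs score near `baseline`,
    each design is rated by its own independent sample of `n_per_design`
    respondents, and the normal approximation holds.
    """
    # Critical z value for the chosen two-sided confidence level.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Standard error of the difference between two independent proportions.
    standard_error = sqrt(2 * baseline * (1 - baseline) / n_per_design)
    return z * standard_error


if __name__ == "__main__":
    for n in (100, 400, 1000):
        gap = min_significant_difference(n)
        print(f"n = {n:>4} per design: gap must exceed about {gap * 100:.1f} points")
```

Under these assumptions, a study with 100 respondents per design needs a gap of roughly 14 percentage points before it can call a winner, and anything smaller is reported as parity. Larger samples shrink that threshold considerably.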

On the methodology side, if a study fails to elicit honest answers from consumers or produces compressed data points (via pre-exposure distortion, monadic approaches, and scale-use compression), the quality of the data can be considerably lower. To put it more colloquially: this is the garbage-in, garbage-out problem.

Given the preponderance of parity results, though, brands adopt a “this is just the way it is” mindset: the outcome is so common that it becomes accepted as the norm. And it doesn’t help that there is…

An unattractive alternative. Traditionally, quantitative validation testing is done at the end of a creative process that, up until then, was largely driven by subjective, qualitative assessments used to guide selection of the design route. So by the time a brand gets any data, it’s generally too late to make any major changes to the creative if the result isn’t positive.

This means there is an incentive to interpret “parity or better” as success, because no one wants to spend even more time, energy, and money going back to the proverbial drawing board—especially considering the prevailing opinion is that design won’t have an effect on business outcomes. This all leads to a faulty, corrosive conclusion that…

Design testing is just a “disaster check.” The lack of faith in design as a growth driver, the prevalence of parity results, and the unappealing idea of starting over have led many in the industry to regard late-stage validation testing as simply a “disaster check”: essentially just making sure the design won’t do major damage to the brand.

In this environment, a parity result has become an acceptable action standard because it at least portends a less-than-calamitous outcome (which doesn’t exactly indicate confidence in a design). This approach completely precludes capitalizing on the upside of design: If brands did more testing earlier, they'd be able to see which design could actually drive sales, rather than just trying to avoid an abject failure. In the parlance of the sports world: Brands are not playing to win, they’re just trying not to lose.

All of this sustains the high in-market failure rate for new designs, which only perpetuates the self-fulfilling cycle of design as a risk to be managed rather than a growth-driver to be unleashed.

Not anymore, though.

Designalytics has transformed design testing with a better approach, robust metrics, and, most importantly, the highest quality data in the industry. Why is our system such a substantial upgrade over traditional validation?

We’re highly decisive. Due in part to our larger sample sizes and forced-choice methodologies, Designalytics’ metrics make clear positive or negative assessments (rather than parity) 80% of the time, while traditional validation testing does so less than half the time. It’s hard to overestimate the impact this can have—brands can proceed with more confidence, more often. There's less equivocation in the process and more assertive action.

We’re also highly predictive. Our empirically validated design performance measurement tools can be used to test pre-market designs, predicting with greater than 90% reliability whether a redesign will outperform its predecessor in sales. No one else in the industry can make this claim, and it only buttresses the confidence mentioned above. Clarity is a powerful catalyst.

We’re agile and affordable. Not only is our turnkey system highly decisive and predictive, it is also easier to implement, returns results faster, and allows brands to be more nimble. If a pre-market design falls short of a decisive win, Designalytics provides the diagnostic insights you need to improve performance in a subsequent round (which you can afford to do because our tool won’t exhaust your testing budget).

“Parity or better” has survived as a measurement of success largely because of inertia and the lack of a clearly better alternative. The latter is no longer true: Designalytics can objectively illuminate the potential for design-driven growth using an efficient, reliable, and highly predictive process.

So the question becomes: Is your brand willing to question the status quo in design testing? The answer to that question could either mean an increase in your bottom line… or the same results as always.

Which raises another question: Does your brand want to be “as good as”… or “better than ever”?